Export data from Redshift to S3 | Setting up Redshift Data Lake Export: Made Easy 101

Unloading data to Amazon S3

Unloading a file from Redshift to S3 (with headers)

Unloading Data from Redshift to S3 – Data Liftoff

UNLOAD

Tutorial: Loading data from Amazon S3

Setting up Redshift Data Lake Export: Made Easy 101

Scheduling data extraction from AWS Redshift to S3

How do I export tables from redshift into Parquet format?

Export data from AWS Redshift to AWS S3

Export JSON data to Amazon S3 using Amazon Redshift UNLOAD

Amazon Redshift to S3: 2 Easy Methods


UNLOAD automatically encrypts data files using Amazon S3 server-side encryption (SSE-S3). You can use any SELECT statement in the UNLOAD command that Amazon Redshift supports, except for a SELECT that uses a LIMIT clause in the outer SELECT. You can also use this feature to export data to JSON files in Amazon S3 from your Amazon Redshift cluster or your Amazon Redshift Serverless endpoint to make ...
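The LIMIT restriction above can be worked around by nesting the limited query one level down. The following is a minimal sketch, not an official helper: the function name `build_unload`, the bucket, and the role ARN are hypothetical, and the LIMIT detection is a naive keyword match. It also doubles single quotes, because the query is passed to UNLOAD as a quoted string.

```python
import re


def build_unload(query: str, s3_prefix: str, iam_role: str) -> str:
    """Return an UNLOAD statement for `query` (illustrative helper only)."""
    inner = query.strip().rstrip(";")
    # UNLOAD rejects a LIMIT in the outer SELECT, so push the whole query
    # into a subselect. This keyword check is deliberately naive.
    if re.search(r"\blimit\b", inner, flags=re.IGNORECASE):
        inner = f"SELECT * FROM ({inner}) AS t"
    # The query is wrapped in single quotes, so double any quotes inside it.
    escaped = inner.replace("'", "''")
    return f"UNLOAD ('{escaped}') TO '{s3_prefix}' IAM_ROLE '{iam_role}';"


if __name__ == "__main__":
    # Hypothetical bucket and role, used only to show the generated SQL.
    print(build_unload(
        "select * from venue where venuestate='NV' limit 10",
        "s3://example-bucket/unload/venue_",
        "arn:aws:iam::123456789012:role/ExampleUnloadRole",
    ))
```

The generated statement would then be run through whatever SQL client you already use against the cluster; nothing here connects to Redshift.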

SQLDataNode references your Redshift database and the SELECT query used to extract your data. S3DataNode points to the S3 path used to store your ...

Method 1: Unload data from Amazon Redshift to S3 using the UNLOAD command.
Method 2: Unload data from Amazon Redshift to S3 in Parquet format.

You can unload the result of an Amazon Redshift query to your Amazon S3 data lake in Apache Parquet, an efficient open columnar storage format for analytics. Parquet format ...
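As a rough illustration of a Parquet unload, the sketch below assembles an UNLOAD statement with the `FORMAT AS PARQUET` option and an optional `PARTITION BY` clause. The helper name `build_parquet_unload`, the table, the bucket, and the role ARN are assumptions made for the example, not values from the original text.

```python
from typing import Sequence


def build_parquet_unload(
    query: str,
    s3_prefix: str,
    iam_role: str,
    partition_by: Sequence[str] = (),
) -> str:
    """Return an UNLOAD statement that writes Parquet files (sketch only)."""
    # Double single quotes because UNLOAD takes the query as a quoted string.
    escaped = query.strip().rstrip(";").replace("'", "''")
    parts = [
        f"UNLOAD ('{escaped}')",
        f"TO '{s3_prefix}'",
        f"IAM_ROLE '{iam_role}'",
        "FORMAT AS PARQUET",
    ]
    if partition_by:
        # Partition columns become key=value folders under the S3 prefix.
        parts.append(f"PARTITION BY ({', '.join(partition_by)})")
    return "\n".join(parts) + ";"


if __name__ == "__main__":
    # Hypothetical table, bucket, and role.
    print(build_parquet_unload(
        "select * from lineitem",
        "s3://example-bucket/lineitem/",
        "arn:aws:iam::123456789012:role/ExampleUnloadRole",
        partition_by=["l_shipdate"],
    ))
```

Partitioning is optional; it is mainly useful when downstream data lake queries filter on the partition column.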

You can load from data files on Amazon S3, Amazon EMR, or any remote host accessible through a Secure Shell (SSH) connection. Or you can load directly from an Amazon ...

The UNLOAD command is quite efficient at getting data out of Redshift and dropping it into S3 so it can be loaded into your application database. Another common ...

This way, Redshift can offload cold data to S3 storage. The two services, Amazon Redshift and Amazon S3, can come together to optimize the ...

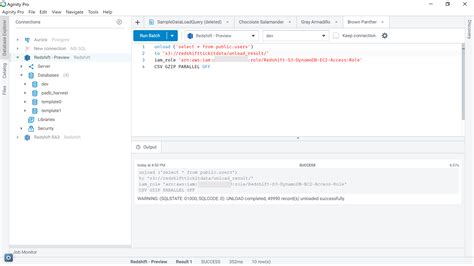

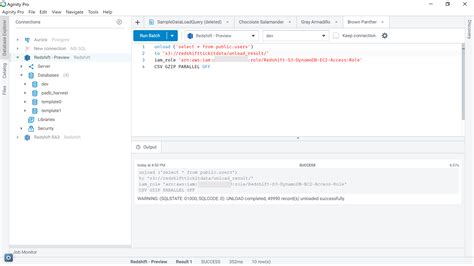

A few days ago, we needed to export the results of a Redshift query into a CSV file and then upload it to S3 so we could feed a third-party API. Redshift has already ...

After we added column aliases, the UNLOAD command completed successfully and the files were exported to the desired location in Amazon S3. The following screenshot shows data is unloaded in ...
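A CSV export with a header row, as described above, might look like the sketch below. It assumes the `FORMAT AS CSV`, `HEADER`, and `PARALLEL OFF` options of UNLOAD; the helper name `build_csv_unload`, the query, the bucket, and the role ARN are hypothetical. Column aliases in the query become the names written in the header line.

```python
def build_csv_unload(
    query: str,
    s3_prefix: str,
    iam_role: str,
    single_file: bool = True,
) -> str:
    """Return an UNLOAD statement that writes CSV with a header (sketch only)."""
    # Double single quotes because UNLOAD takes the query as a quoted string.
    escaped = query.strip().rstrip(";").replace("'", "''")
    options = ["FORMAT AS CSV", "HEADER"]
    if single_file:
        # Write serially instead of one file per slice; very large results
        # can still be split across several files.
        options.append("PARALLEL OFF")
    return (
        f"UNLOAD ('{escaped}') TO '{s3_prefix}' "
        f"IAM_ROLE '{iam_role}' " + " ".join(options) + ";"
    )


if __name__ == "__main__":
    # Hypothetical query, bucket, and role.
    print(build_csv_unload(
        "select venuename as name, venueseats as seats from venue",
        "s3://example-bucket/exports/venue_",
        "arn:aws:iam::123456789012:role/ExampleUnloadRole",
    ))
```

Turning parallel writes off is a convenience for consumers that expect one file, such as a third-party API; for large tables the default parallel unload is usually faster.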

Amazon Redshift to Amazon S3 transfer operator. This operator loads data from an Amazon Redshift table to an existing Amazon S3 bucket. For more information about this operator, see RedshiftToS3Operator. For more information about the UNLOAD command it uses, see the Amazon Redshift documentation.

After using Integrate.io to load data into Amazon Redshift, you may want to extract data from your Redshift tables to Amazon S3. There are various reasons why you would want to do this, for example:
You want to load the data in your Redshift tables into some other data source (e.g. MySQL).
To better manage space in your Redshift cluster, ...

Read data from Amazon S3, then transform and load it into Redshift Serverless. Save the notebook as an AWS Glue job and schedule it to run. Prerequisites: for this walkthrough, we must upload the Yellow Taxi Trip Records data and the taxi zone lookup table datasets into Amazon S3. Steps to do that ...

ETL (Extract, Transform, Load) job: An ETL job is a data integration process that involves three main stages. Extract: retrieving data from one or more sources, such as databases, files, or APIs ...

How to Export Data from Redshift. The COPY command is the most common and recommended way to load data into Amazon Redshift. Similarly, Amazon Redshift has the UNLOAD command, which unloads the result of a query to one or more files on Amazon S3. The data is unloaded as delimited text (pipe-delimited by default, or CSV when the CSV option is given), and there are a number of ...

Before you export DB snapshot data to Amazon S3, give the snapshot export tasks write-access permission to the Amazon S3 bucket. To grant this permission, create an IAM policy that provides access to the bucket, then create an IAM role and attach the policy to the role. You later assign the IAM role to your snapshot export task.

Two options: Spark streaming back into S3 using the Redshift connector, or UNLOAD into S3 gzipped and then process with a command-line tool. Not sure which is better. I'm not clear on how to easily translate the Redshift schema into something Parquet could take in, but maybe the Spark connector will take care of that for me.
